Intel launches three new Xeon 6 P-Core CPUs, will debut in Nvidia DGX B300 AI systems

Three new processors take the stage

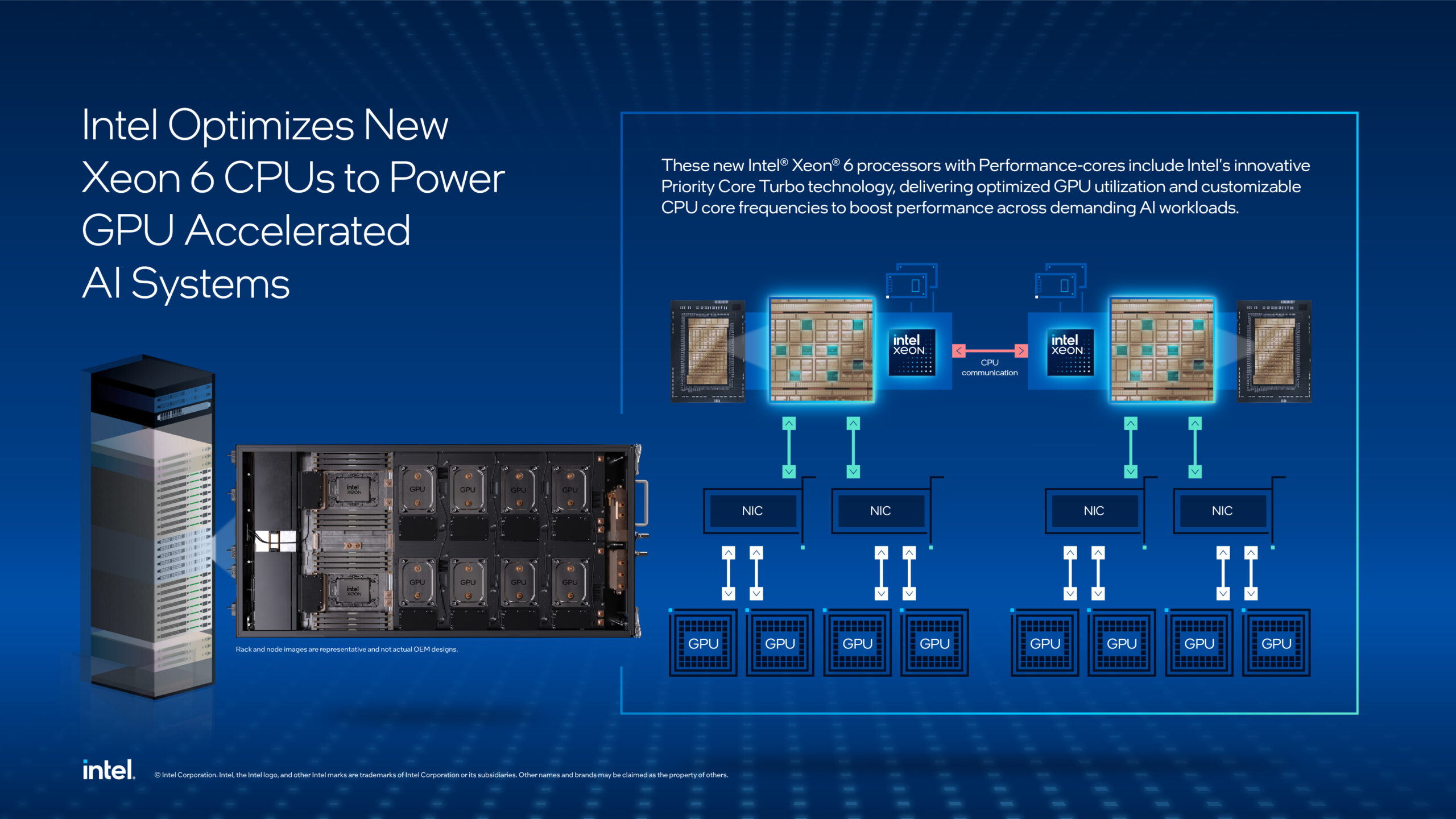

Intel has unveiled three new Xeon 6 P-core CPUs that it says are designed specifically to handle the most advanced GPU-powered AI systems. The new processors will debut in Nvidia's DGX B300 AI systems.

The new processors, built on Intel's Performance-cores (P-cores), also feature Intel Priority Core Turbo (PCT) and Intel Speed Select Technology – Turbo Frequency (SST-TF), which the company claims deliver customizable CPU core frequencies to improve GPU performance in demanding AI workloads.

All three are available now, and the Intel Xeon 6776P also comes integrated in the DGX B300, Nvidia's latest AI-accelerated system.

Intel says that the pairing of PCT and Intel SST-TF marks "a significant leap forward in AI system performance." PCT should allow selected high-priority cores to be dynamically prioritized so they can reach higher turbo frequencies, while lower-priority cores operate at base frequency in parallel to optimize resource distribution. According to Intel, PCT can run up to eight high-priority cores at elevated turbo frequencies.
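To make the idea concrete, here is a minimal toy model of the behaviour Intel describes: a handful of cores flagged as high priority run at a turbo frequency while every other core stays at base. The function name `assign_frequencies`, the two-level frequency split, and the example figures (64 cores, 2.3 GHz base, 3.9 GHz turbo) are assumptions for illustration only. This is not Intel's interface, and Intel has not said that priority cores run at exactly the listed max turbo.

```python
from typing import Dict, Iterable

# "Up to eight" high-priority cores, per Intel's stated limit for PCT.
MAX_PRIORITY_CORES = 8


def assign_frequencies(
    total_cores: int,
    priority_cores: Iterable[int],
    base_ghz: float,
    turbo_ghz: float,
) -> Dict[int, float]:
    """Toy model: priority cores get turbo_ghz, all other cores get base_ghz."""
    priority = set(priority_cores)
    if len(priority) > MAX_PRIORITY_CORES:
        raise ValueError(f"at most {MAX_PRIORITY_CORES} priority cores allowed")
    if any(core < 0 or core >= total_cores for core in priority):
        raise ValueError("priority core index out of range")
    return {
        core: turbo_ghz if core in priority else base_ghz
        for core in range(total_cores)
    }


# Hypothetical example: cores 0-7 feed the GPUs and are marked high priority.
freqs = assign_frequencies(64, range(8), base_ghz=2.3, turbo_ghz=3.9)
boosted = sum(1 for f in freqs.values() if f == 3.9)
print(f"{boosted} cores boosted, {len(freqs) - boosted} at base")
```

In a real system the operating system or firmware decides which cores count as high priority; the sketch only shows the split between boosted and base-frequency cores.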

Intel's Xeon 6 CPUs include up to 128 P-cores per CPU and 20% more PCIe lanes than previous-generation Xeon processors, with up to 192 PCIe lanes per 2S server. Intel also claims Xeon 6 offers 30% faster memory speeds compared to the competition (specifically the latest AMD EPYC processors), thanks to Multiplexed Rank DIMMs (MRDIMMs) and Compute Express Link, and up to 2.3x higher memory bandwidth compared to the previous generation.
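The PCIe figures can be sanity-checked with a few lines of arithmetic. The sketch below takes the two numbers quoted above (192 lanes per two-socket server and a 20% uplift) and works backwards to the per-socket count and the previous-generation total those numbers imply. The previous-generation result is derived from Intel's percentages, not quoted from a spec sheet, so treat it as an inference.

```python
# Figures quoted in the article.
lanes_per_2s = 192   # PCIe lanes in a two-socket (2S) Xeon 6 server
uplift = 0.20        # "20% more PCIe lanes than previous-generation"

# Each socket contributes half of the 2S total.
lanes_per_socket = lanes_per_2s // 2

# Working backwards: previous * (1 + uplift) = current.
implied_prev_2s = round(lanes_per_2s / (1 + uplift))
implied_prev_socket = implied_prev_2s // 2

print(f"Xeon 6: {lanes_per_socket} lanes per socket")
print(f"Implied previous gen: {implied_prev_2s} per 2S, {implied_prev_socket} per socket")
```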

Intel says its P-core Xeon 6 processors support two DIMMs per channel (2DPC), a configuration that allows up to 8TB of system memory. It also says MRDIMMs boost bandwidth and performance while reducing latency. The new CPUs also feature up to 504 MB of L3 cache for faster data retrieval.

Intel Xeon 6 processors also feature Intel Advanced Matrix Extensions (AMX), which lets certain tasks be offloaded to the CPU. Intel confirmed AMX now supports FP16 precision arithmetic, which enables efficient data pre-processing and critical CPU tasks in AI workloads. Alongside its three new P-core processors, Intel has also added a B-variant, the 6716P-B.


| Product name | Total cores | Max turbo frequency | Base frequency | Cache | TDP |
|---|---|---|---|---|---|
| Intel Xeon 6732P Processor | 32 | 4.3 GHz | 3.8 GHz | 144 MB | 350 W |
| Intel Xeon 6774P Processor | 64 | 3.9 GHz | 2.5 GHz | 336 MB | 350 W |
| Intel Xeon 6776P Processor | 64 | 3.9 GHz | 2.3 GHz | 336 MB | 350 W |
| Intel Xeon 6716P-B Processor | 40 | 3.5 GHz | 2.5 GHz | 160 MB | 235 W |

The 6716P-B features just 40 cores and draws less power, with a 235 W TDP.

Intel says its Xeon 6 processors with P-cores "provide the ideal combination of performance and energy efficiency" to handle the increasing demands of AI computing.

This week, at Computex 2025, the company also unveiled its brand-new $299 Arc Pro B50 with 16GB of memory, as well as 'Project Battlematrix' workstations with 24GB Arc Pro B60 GPUs.


Stephen is Tom's Hardware's News Editor with almost a decade of industry experience covering technology, having worked at TechRadar, iMore, and even Apple over the years. He has covered the world of consumer tech from nearly every angle, including supply chain rumors, patents, and litigation, and more. When he's not at work, he loves reading about history and playing video games.

Obsidian Blue

This doesn’t seem particularly impressive, or am I missing something?

The EPYC 9575F boosts many more than 8 cores beyond 5GHz under sustained load - so surely the EPYC CPUs would still perform better as a GPU server CPU than these new Intel Xeons?

Adding very expensive MRDIMM memory may help the Xeon but I doubt it would bridge the performance gap.

thestryker

> Obsidian Blue said: This doesn’t seem particularly impressive, or am I missing something?
>
> The EPYC 9575F boosts many more than 8 cores beyond 5GHz under sustained load - so surely the EPYC CPUs would still perform better as a GPU server CPU than these new Intel Xeons?
>
> Adding very expensive MRDIMM memory may help the Xeon but I doubt it would bridge the performance gap.

While it's not in the text of the article, the slide says PCT enables customizable turbo frequencies, which I'd guess are not limited to the CPU's stock behavior.

thestryker

> JRStern said: Did I miss where it says if these are 18a or made by TSM?

No change from the rest of the line: Intel 3

TerryLaze

> Obsidian Blue said: This doesn’t seem particularly impressive, or am I missing something?
>
> The EPYC 9575F boosts many more than 8 cores beyond 5GHz under sustained load - so surely the EPYC CPUs would still perform better as a GPU server CPU than these new Intel Xeons?
>
> Adding very expensive MRDIMM memory may help the Xeon but I doubt it would bridge the performance gap.

Just as with the x3d CPUs, core counts and clocks aren't everything.

I have no idea what these Xeons provide, if anything, just saying that clocks are pretty low on the priority list for servers.

Maybe it's just because of the PCIe lanes and RAM speed...

> Intel's Xeon 6 CPUs include up to 128 P-cores per CPU and 20% more PCIe lanes than previous-generation Xeon processors, with up to 192 PCIe lanes per 2S server. Intel also claims Xeon 6 offers 30% faster memory speeds compared to the competition (specifically the latest AMD EPYC processors), thanks to Multiplexed Rank DIMMs (MRDIMMs) and Compute Express Link, and up to 2.3x higher memory bandwidth compared to the previous generation.

Obsidian Blue

> TerryLaze said: Just as with the x3d CPUs, core counts and clocks aren't everything.
>
> I have no idea what these Xeons provide, if anything, just saying that clocks are pretty low on the priority list for servers.
>
> Maybe it's just because of the PCIe lanes and RAM speed...

I'd beg to differ with regards to clock speeds on cores within GPU servers. It's been shown in lab tests that the 9575F can deliver 20% more tokens/sec in an 8xH100 GPU server (running Llama 3.1 - 70B @FP8) compared to an identical server with Intel Xeon 8592+. The 9575F was specifically designed to deliver high boost to a few cores since AI workloads tend not to be sustained and are more 'bursty' in their CPU demands - however the CPUs have to keep feeding the GPUs, so when they're called on they need to complete their orchestration tasks as fast as possible.

It will be interesting to see if Phoronix do some independent testing and compare the new Intel CPUs vs EPYC 9575F for GPU workloads.

TerryLaze

> Obsidian Blue said: I'd beg to differ with regards to clock speeds on cores within GPU servers. It's been shown in lab tests that the 9575F can deliver 20% more tokens/sec in a 8xH100 GPU server (running Llama 3.1 - 70B @FP8)

Is this what every server is running?! Like the one and only thing?!

Obsidian Blue

> TerryLaze said: Is this what every server is running?! Like the one and only thing?!

Sorry, I'm not sure if I've understood the question. The testing was completed on individual servers and they were just running a Llama 3.1-70B inference benchmark.

There was also a publicly released test that ran a Llama 3.1-8B training benchmark on two 8xH100 GPU systems (9575F vs Xeon 8592+) and it shows a +15% training performance improvement with EPYC.

TerryLaze

> Obsidian Blue said: Sorry, I'm not sure if I've understood the question. The testing was completed on individual servers and they were just running a Llama 3.1-70B inference benchmark.
>
> There was also a publicly released test that ran a Llama 3.1-8B Training benchmark on 2 8xH100 GPU systems (9575F vs Xeon 8592+) and it shows a +15% training performance improvement with EPYC

My question is that I have no idea how representative this is, or if the Nvidia servers are made for this one benchmark.

I'm just saying, maybe they bought the Intel CPUs because they are running something else.